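1. Introduction

1.1 Overview

What is a Redis cluster:

When the data set grows too large for a single master replication group to hold, several replication groups are combined into a cluster that scales horizontally, with each group storing only part of the whole data set. This is Redis Cluster: a set of Redis nodes that share the data among themselves.

Features:

A Redis cluster supports multiple masters, and each master can have multiple slaves attached. This provides read/write splitting and high data availability.

Because the cluster has Sentinel-style failover built in, high availability comes with it and Sentinel is no longer needed.

A client does not have to connect to every node in the cluster; connecting to any one available node is enough.

Hash slots are assigned to the physical nodes, and the cluster maintains the relationship among nodes, slots, and data.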

Data sharding in Redis Cluster:

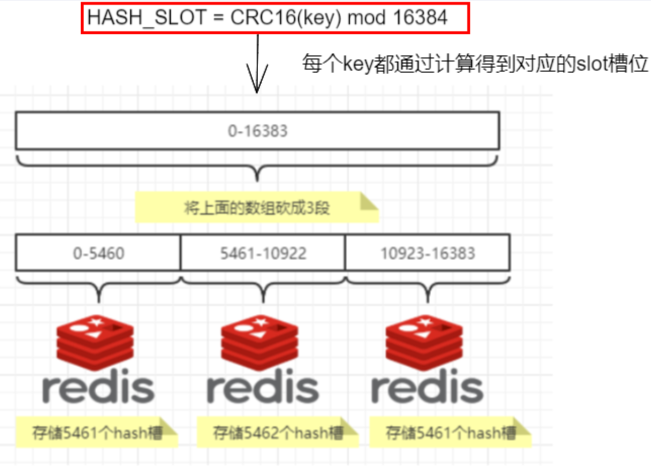

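Redis Cluster does not use consistent hashing; it introduces the concept of hash slots instead. A cluster has 16384 hash slots, and each key is mapped to a slot by taking CRC16 of the key modulo 16384. Each node in the cluster is responsible for a subset of the hash slots. For example, in a cluster with 3 nodes each node holds roughly a third of the slots; the cluster built in section 3 ends up with 0-5460, 5461-10922, and 10923-16383.

What is a shard:

With Redis Cluster the stored data is spread across multiple Redis machines; this is called sharding. In short, each Redis instance in the cluster is treated as one shard of the whole data set.

How to find the shard for a given key:

To find the shard for a given key, compute CRC16(key) and take the result modulo 16384 to get the slot; the node that owns that slot is the key's shard. Because the hash function is deterministic, a given key always maps to the same slot, so we always know where to read that key later.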

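1.2 Advantages of sharding and slots

The main advantage: easy scaling out and in, and easy data placement and lookup:

This structure makes it easy to add or remove nodes. To add a new node D, move some slots from nodes A, B, and C to D. To remove node A, move A's slots to B and C, then remove the now-empty A from the cluster. Moving hash slots from one node to another does not require stopping the service, so adding or removing nodes, or changing the number of slots a node holds, does not make the cluster unavailable.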

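1.3 Why the maximum number of slots in a Redis cluster is 16384

Normal heartbeat packets carry the full configuration of a node, which can replace the old configuration in an idempotent way in order to update it.

This means they contain the node's slot configuration in raw form, which takes 2 KB of space with 16k slots but would take a prohibitive 8 KB with 65k slots.

At the same time, because of other design tradeoffs, Redis Cluster is unlikely to scale to more than 1000 master nodes.

So 16k is in the right range: enough slots per master with a maximum of 1000 masters, yet small enough to propagate the slot configuration easily as a raw bitmap. Note that in small clusters the bitmap is hard to compress, because when N is small the bitmap has slots / N bits set, which is a large percentage of the bits.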

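(1) With 65536 slots the heartbeat message header would reach 8 KB, which makes the heartbeat packet too large.

The largest field in the message header is myslots[CLUSTER_SLOTS/8]. With 65536 slots it takes 65536 ÷ 8 ÷ 1024 = 8 KB.

With 16384 slots the same field takes 16384 ÷ 8 ÷ 1024 = 2 KB.

Every second each Redis node sends a certain number of ping messages as heartbeats. With 65536 slots the ping header would be too large and would waste bandwidth.

(2) The number of master nodes in a Redis cluster is very unlikely to exceed 1000.

The more nodes in the cluster, the more data each heartbeat body carries. Beyond 1000 nodes this would also congest the network, so the Redis author does not recommend more than 1000 nodes in a Redis cluster. For a cluster of up to 1000 nodes, 16384 slots are enough and there is no need to go to 65536.

(3) With fewer slots, and a small number of nodes, the bitmap compresses better and is easier to transmit.

A master's configuration stores the hash slots it owns as a bitmap, which is compressed for transmission. If the fill rate of the bitmap, slots / N (N being the number of nodes), is high, the compression ratio is poor. So with few nodes and many hash slots the bitmap compresses poorly.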
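
The arithmetic in reason (1) can be checked with a few lines of Python. This is only a sketch of the calculation: one bit per slot, as in the myslots[CLUSTER_SLOTS/8] field quoted above. The function name slot_bitmap_bytes is made up for this illustration.

```python
def slot_bitmap_bytes(cluster_slots: int) -> int:
    """Size in bytes of a bitmap that holds one bit per slot."""
    return cluster_slots // 8


for slots in (16384, 65536):
    size = slot_bitmap_bytes(slots)
    # 16384 slots -> 2048 bytes (2 KB); 65536 slots -> 8192 bytes (8 KB)
    print(f"{slots} slots -> {size} bytes ({size // 1024} KB)")
```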

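1.4 How slot mapping evolved

Stage 1: hash modulo partitioning

Suppose there are 200 million records, that is 200 million key-value pairs. A single machine cannot cope, so the data must be distributed. With 3 machines forming a cluster, every read and write uses hash(key) % N (N being the number of machines) to decide which node the data maps to.

Advantages:

Simple and effective. It only requires estimating the data volume and planning the nodes up front, for example 3, 8, or 10 machines, to cover the load for a period of time. Hashing sends a fixed subset of requests to the same server, so each server handles (and keeps the state for) its own share of requests, which gives load balancing plus divide and conquer.

Disadvantages:

Scaling the planned nodes out or in is painful. Whenever the number of nodes changes, the mapping has to be recomputed. With a fixed number of servers there is no problem, but with elastic scaling or a node failure the formula changes: hash(key) % 3 becomes hash(key) % ?. The computed result changes for most keys, and the server chosen by the formula is no longer predictable. If one Redis machine goes down, the change in machine count reshuffles nearly all the data.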
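
The reshuffling problem is easy to observe. The sketch below assumes zlib.crc32 as a stand-in for hash(key) (any stable hash would do) and uses made-up key names; it counts how many keys change node when a 3-node setup grows to 4 nodes.

```python
import zlib


def node_for(key: str, node_count: int) -> int:
    # crc32 stands in for hash(key); the node is chosen by hash % N
    return zlib.crc32(key.encode()) % node_count


def moved_fraction(keys, old_count: int, new_count: int) -> float:
    """Fraction of keys whose node changes when N goes from old_count to new_count."""
    moved = sum(1 for k in keys if node_for(k, old_count) != node_for(k, new_count))
    return moved / len(keys)


keys = [f"user:{i}" for i in range(10_000)]
print(f"3 -> 4 nodes: {moved_fraction(keys, 3, 4):.0%} of keys change node")
```

For a uniform hash, a key keeps its node only when hash % 3 equals hash % 4, which holds for 3 of every 12 hash values, so about three quarters of the keys are expected to move.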

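Stage 2: consistent hashing

The goal is to migrate as little data as possible when the number of nodes changes.

All storage nodes are placed on a hash ring whose two ends are joined. Each key is hashed and stored on the nearest node clockwise from its position.

When a node joins or leaves, only the next node clockwise from it on the ring is affected.

Advantages:

Adding or removing a node affects only the adjacent node clockwise on the ring; other nodes are unaffected.

Disadvantages:

Data distribution depends on node positions. Because the nodes are not evenly spaced on the ring, the data is not evenly distributed either.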
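
A minimal ring, without virtual nodes, shows both properties described above. This is an illustrative sketch only: the HashRing class, the node names, and the choice of md5 as the ring hash are all assumptions made for the example.

```python
import bisect
import hashlib
from collections import Counter


def ring_pos(name: str) -> int:
    # Position on a 2**32 ring; md5 is used only as a stable, well-mixed hash
    return int.from_bytes(hashlib.md5(name.encode()).digest()[:4], "big")


class HashRing:
    def __init__(self, nodes):
        self._points = sorted((ring_pos(n), n) for n in nodes)

    def add(self, node: str) -> None:
        bisect.insort(self._points, (ring_pos(node), node))

    def owner(self, key: str) -> str:
        # First node at or after the key's position, wrapping around the ring
        idx = bisect.bisect_left(self._points, (ring_pos(key), ""))
        return self._points[idx % len(self._points)][1]


keys = [f"user:{i}" for i in range(10_000)]
ring = HashRing(["node-a", "node-b", "node-c"])
before = {k: ring.owner(k) for k in keys}
print("keys per node:", Counter(before.values()))  # usually far from an even split

ring.add("node-d")
after = {k: ring.owner(k) for k in keys}
moved = [k for k in keys if before[k] != after[k]]
print(f"{len(moved) / len(keys):.0%} of keys moved")
print("every moved key went to node-d:", all(after[k] == "node-d" for k in moved))
```

Only keys that now fall on the arc owned by the new node move, but the per-node counts show the skew that motivates the next stage.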

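Stage 3: hash slot partitioning

1. Why it appeared

To address the data skew problem of consistent hashing.

The hash slot space is essentially an array: the indices [0, 2^14 - 1] form the hash slot space.

2. What it does

It solves the even-distribution problem by adding a layer between data and nodes, called hash slots, which manages the relationship between them. Nodes now hold slots, and slots hold data.

Slots solve the granularity problem: data moves in coarser units, which makes migration easier. Hashing solves the mapping problem: the key's hash determines its slot, which makes placement easy.

3. How many hash slots

A cluster has exactly 16384 slots, numbered 0 to 16383 (0 to 2^14 - 1). The slots are assigned to the masters in the cluster, and no particular assignment strategy is required. The cluster records which node owns which slot. With that settled, the key is hashed and taken modulo 16384, and the remainder is the slot the key falls into: HASH_SLOT = CRC16(key) mod 16384. Data moves in units of slots, and because the number of slots is fixed this is easy to handle, which solves the data migration problem.

Redis Cluster has 16384 built-in hash slots, which Redis maps roughly evenly across the nodes. To store a key-value pair, Redis applies CRC16 to the key and takes the result modulo 16384 [CRC16(key) % 16384], so every key corresponds to a slot numbered 0 to 16383 and therefore to a node. For example, keys A and B may fall on Node2 while key C falls on Node3.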
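
The formula HASH_SLOT = CRC16(key) mod 16384 can be reproduced in a few lines. The sketch below uses the XMODEM variant of CRC16 (polynomial 0x1021, initial value 0), which is the variant Redis Cluster uses. It deliberately ignores hash tags ({...}), and the function names are made up for this illustration.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16-CCITT, XMODEM variant: polynomial 0x1021, initial value 0."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    # Simplified: hashes the whole key, without hash tag handling
    return crc16_xmodem(key.encode()) % 16384


print(key_slot("k1"))  # 12706, the same value cluster keyslot k1 reports in section 4
```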

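2. Configure the cluster

Prerequisites:

Three virtual machines, each with the working directory created: mkdir -p /myredis/cluster

Configure 6 independent Redis instances. Example configuration file: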

bind 0.0.0.0

daemonize yes

protected-mode no

port 6381

logfile "/myredis/cluster/cluster6381.log"

pidfile /myredis/cluster6381.pid

dir /myredis/cluster

dbfilename dump6381.rdb

appendonly yes

appendfilename "appendonly6381.aof"

requirepass 111111

masterauth 111111

cluster-enabled yes

cluster-config-file nodes-6381.conf

cluster-node-timeout 5000

Use the configuration above for every instance, and remember to change the port in each copy.
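
Since the six files differ only in the port number, they can be generated from one template. The script below is a sketch under that assumption: the template mirrors the settings shown above, the file name pattern redis-<port>.conf follows the name used in the startup command later in this section, and render_config and write_configs are names made up for this example.

```python
from pathlib import Path

# Same settings as the sample file above, with the port as a placeholder
TEMPLATE = """\
bind 0.0.0.0
daemonize yes
protected-mode no
port {port}
logfile "/myredis/cluster/cluster{port}.log"
pidfile /myredis/cluster{port}.pid
dir /myredis/cluster
dbfilename dump{port}.rdb
appendonly yes
appendfilename "appendonly{port}.aof"
requirepass 111111
masterauth 111111
cluster-enabled yes
cluster-config-file nodes-{port}.conf
cluster-node-timeout 5000
"""


def render_config(port: int) -> str:
    """Return the configuration text for one instance."""
    return TEMPLATE.format(port=port)


def write_configs(ports, directory: str) -> list:
    """Write redis-<port>.conf for each port and return the created paths."""
    target = Path(directory)
    target.mkdir(parents=True, exist_ok=True)
    paths = []
    for port in ports:
        path = target / f"redis-{port}.conf"
        path.write_text(render_config(port))
        paths.append(path)
    return paths


print(render_config(6382))
```

On each virtual machine, a call such as write_configs([6381, 6382], "/myredis/cluster") would produce that machine's two files.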

Error encountered:

[root@192 cluster]# redis-server /myredis/cluster/redis-6381.conf

*** FATAL CONFIG FILE ERROR (Redis 7.0.0) ***

replicaof directive not allowed in cluster mode

Sentinel had appended replicaof entries to the configuration file. Delete the lines Sentinel added and start the instance again.

3. Build the cluster

[root@192 cluster]# redis-cli -a 111111 --cluster create --cluster-replicas 1 192.168.1.11:6381 192.168.1.11:6382 192.168.1.12:6383 192.168.1.12:6384 192.168.1.13:6385 192.168.1.13:6386 # 1. enter the command

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 192.168.1.12:6384 to 192.168.1.11:6381

Adding replica 192.168.1.13:6386 to 192.168.1.12:6383

Adding replica 192.168.1.11:6382 to 192.168.1.13:6385

M: 491bb2df7fa39bafd917f7aaa0a409e19e41b07f 192.168.1.11:6381

slots:[0-5460] (5461 slots) master

S: cc9a9f6bad23314263e2b89e4beede4fc59c3ef3 192.168.1.11:6382

replicates 95d8800931e38739a85d6face5296d719a8d5bfa

M: 3075d51754554aa421ee23ccbd8e263c0d5c4f0e 192.168.1.12:6383

slots:[5461-10922] (5462 slots) master

S: 4a60743d7b067dfd03c6a0d4403c88ce173abb1c 192.168.1.12:6384

replicates 491bb2df7fa39bafd917f7aaa0a409e19e41b07f

M: 95d8800931e38739a85d6face5296d719a8d5bfa 192.168.1.13:6385

slots:[10923-16383] (5461 slots) master

S: 458cf2edf6cc3005cee4fc3aa93278efa86637ec 192.168.1.13:6386

replicates 3075d51754554aa421ee23ccbd8e263c0d5c4f0e

Can I set the above configuration? (type 'yes' to accept): yes # 2. type yes to confirm

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

>>> Performing Cluster Check (using node 192.168.1.11:6381)

M: 491bb2df7fa39bafd917f7aaa0a409e19e41b07f 192.168.1.11:6381

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 458cf2edf6cc3005cee4fc3aa93278efa86637ec 192.168.1.13:6386

slots: (0 slots) slave

replicates 3075d51754554aa421ee23ccbd8e263c0d5c4f0e

S: cc9a9f6bad23314263e2b89e4beede4fc59c3ef3 192.168.1.11:6382

slots: (0 slots) slave

replicates 95d8800931e38739a85d6face5296d719a8d5bfa

M: 95d8800931e38739a85d6face5296d719a8d5bfa 192.168.1.13:6385

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 4a60743d7b067dfd03c6a0d4403c88ce173abb1c 192.168.1.12:6384

slots: (0 slots) slave

replicates 491bb2df7fa39bafd917f7aaa0a409e19e41b07f

M: 3075d51754554aa421ee23ccbd8e263c0d5c4f0e 192.168.1.12:6383

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

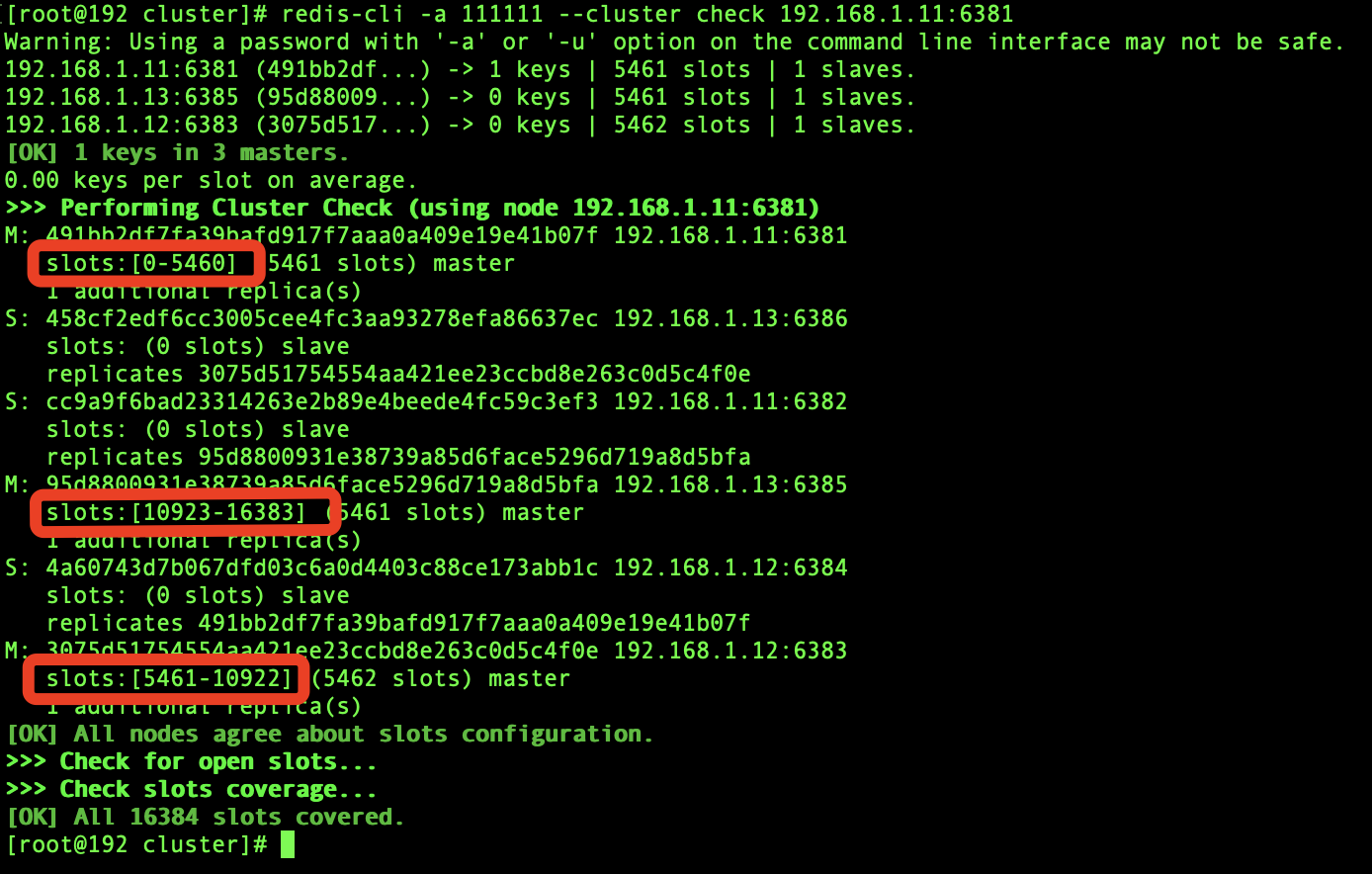

[OK] All 16384 slots covered. # 3. the cluster is built: three masters, three slaves

[root@192 cluster]#

Which hosts the 16384 slots map to
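
The three ranges in the output above (0-5460, 5461-10922, 10923-16383) are what an even split of 16384 slots over three masters looks like. The sketch below reproduces those numbers. The rounding rule is an assumption chosen to match the output shown here, not a statement of how redis-cli is implemented, and split_slots is a name made up for this example.

```python
import math


def split_slots(master_count: int, total_slots: int = 16384):
    """Split slots 0..total_slots-1 into contiguous, near-equal ranges."""
    per_master = total_slots / master_count
    ranges, first, cursor = [], 0, 0.0
    for i in range(master_count):
        # Round the ideal end position to the nearest integer (half up)
        last = math.floor(cursor + per_master - 1 + 0.5)
        if i == master_count - 1 or last > total_slots - 1:
            last = total_slots - 1  # the last master takes whatever remains
        ranges.append((first, last))
        first = last + 1
        cursor += per_master
    return ranges


print(split_slots(3))  # [(0, 5460), (5461, 10922), (10923, 16383)]
```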

4. Scenario walkthrough

127.0.0.1:6381> cluster keyslot k1 # show the slot that key k1 maps to

(integer) 12706 # slot 12706 belongs to the 6385 node

Run set k1 v1 on 6381:

127.0.0.1:6381> set k1 v1

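(error) MOVED 12706 192.168.1.13:6385 # error

127.0.0.1:6381>

Cause: the key's slot is owned by another node, so the command has to be routed there.

Fix: connect with the -c option (cluster mode) so that redis-cli follows the redirect automatically.

[root@192 cluster]# redis-cli -a 111111 -p 6381 -c # connect with -c so redirects are followed

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

127.0.0.1:6381> set k1 v1 # set succeeds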

-> Redirected to slot [12706] located at 192.168.1.13:6385

OK
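
What the -c option does can be pictured as: read the MOVED reply, extract the target address, and resend the command there. The sketch below covers only the parsing step for the reply format shown above; it does not talk to a server, and Moved and parse_moved are names made up for this illustration.

```python
from typing import NamedTuple, Optional


class Moved(NamedTuple):
    slot: int
    host: str
    port: int


def parse_moved(error: str) -> Optional[Moved]:
    """Parse a reply such as 'MOVED 12706 192.168.1.13:6385'; return None otherwise."""
    parts = error.split()
    if len(parts) != 3 or parts[0] != "MOVED":
        return None
    host, _, port = parts[2].rpartition(":")
    return Moved(int(parts[1]), host, int(port))


print(parse_moved("MOVED 12706 192.168.1.13:6385"))
# Moved(slot=12706, host='192.168.1.13', port=6385)
```

A cluster-aware client would then reconnect to the returned host and port and retry the same command.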

4.1 Failover test: if master 6381 goes down, is its slave promoted to master?

1. Check the replication relationship

127.0.0.1:6381> info replication # show the replication relationship

# Replication

role:master

connected_slaves:1

slave0:ip=192.168.1.12,port=6384,state=online,offset=17286,lag=0 # 6384 is the slave of 6381

master_failover_state:no-failover

master_replid:4d3c3673de89c3c84a199d59c70ae6cf245742fc

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:17286

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:17286

2. Shut down 6381

127.0.0.1:6381> shutdown

not connected> quit

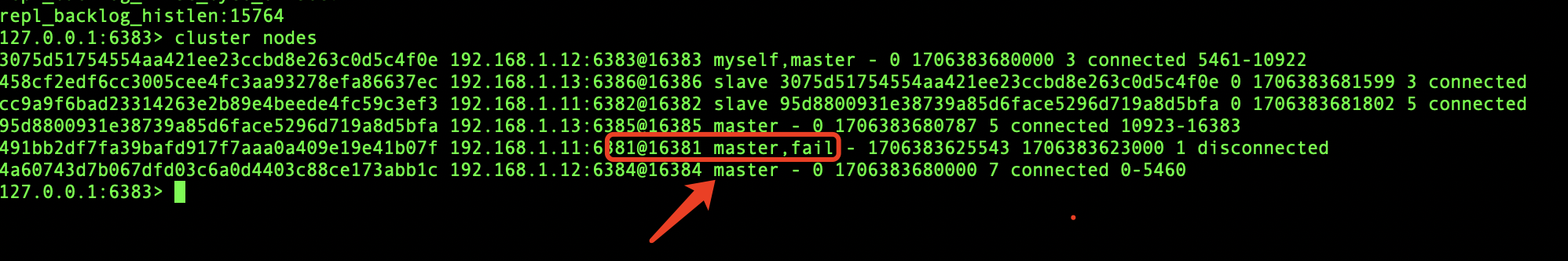

3. Log in to any node in the cluster and run cluster nodes to check the topology: 6381 is marked fail and 6384 has become the new master.

4.2 After 6381 comes back, is it a master or a slave? It rejoins as a slave.

[root@192 cluster]# redis-server redis-6381.conf # start 6381

[root@192 cluster]# redis-cli -a 111111 -p 6381 -c # log in

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

127.0.0.1:6381> cluster nodes # show the cluster topology

3075d51754554aa421ee23ccbd8e263c0d5c4f0e 192.168.1.12:6383@16383 master - 0 1706383916749 3 connected 5461-10922

4a60743d7b067dfd03c6a0d4403c88ce173abb1c 192.168.1.12:6384@16384 master - 0 1706383917558 7 connected 0-5460

95d8800931e38739a85d6face5296d719a8d5bfa 192.168.1.13:6385@16385 master - 0 1706383917558 5 connected 10923-16383

491bb2df7fa39bafd917f7aaa0a409e19e41b07f 192.168.1.11:6381@16381 myself,slave 4a60743d7b067dfd03c6a0d4403c88ce173abb1c 0 1706383917000 7 connected # 6381 is now a slave

458cf2edf6cc3005cee4fc3aa93278efa86637ec 192.168.1.13:6386@16386 slave 3075d51754554aa421ee23ccbd8e263c0d5c4f0e 0 1706383916000 3 connected

cc9a9f6bad23314263e2b89e4beede4fc59c3ef3 192.168.1.11:6382@16382 slave 95d8800931e38739a85d6face5296d719a8d5bfa 0 1706383917759 5 connected
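
The cluster nodes output is one line per node with space-separated fields: node ID, address, flags, master ID (or -), ping and pong timestamps, config epoch, link state, and then any slot ranges. The sketch below turns that text into dictionaries so the roles are easier to read; parse_cluster_nodes is a name made up for this example, and the sample lines are copied from the output above.

```python
def parse_cluster_nodes(text: str):
    """Parse CLUSTER NODES output into a list of dicts (one per node)."""
    nodes = []
    for line in text.strip().splitlines():
        fields = line.split()
        if len(fields) < 8:
            continue  # skip blank or malformed lines
        flags = fields[2].split(",")
        nodes.append({
            "id": fields[0],
            "addr": fields[1].split("@")[0],  # drop the cluster bus port
            "role": "master" if "master" in flags else "slave",
            "myself": "myself" in flags,
            "master_id": None if fields[3] == "-" else fields[3],
            "slots": fields[8:],
        })
    return nodes


sample = """
4a60743d7b067dfd03c6a0d4403c88ce173abb1c 192.168.1.12:6384@16384 master - 0 1706383917558 7 connected 0-5460
491bb2df7fa39bafd917f7aaa0a409e19e41b07f 192.168.1.11:6381@16381 myself,slave 4a60743d7b067dfd03c6a0d4403c88ce173abb1c 0 1706383917000 7 connected
"""

for node in parse_cluster_nodes(sample):
    print(node["addr"], node["role"], node["slots"])
```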

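4.3 After a node failure the topology no longer matches the original design. How can it be restored?

In this example 6384 took over as master from 6381. To switch back, log in to 6381 and run: cluster failover

127.0.0.1:6381> cluster failover # promote this node back to master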

OK

127.0.0.1:6381> cluster nodes

3075d51754554aa421ee23ccbd8e263c0d5c4f0e 192.168.1.12:6383@16383 master - 0 1706384388767 3 connected 5461-10922

4a60743d7b067dfd03c6a0d4403c88ce173abb1c 192.168.1.12:6384@16384 slave 491bb2df7fa39bafd917f7aaa0a409e19e41b07f 0 1706384389000 8 connected

95d8800931e38739a85d6face5296d719a8d5bfa 192.168.1.13:6385@16385 master - 0 1706384389573 5 connected 10923-16383

491bb2df7fa39bafd917f7aaa0a409e19e41b07f 192.168.1.11:6381@16381 myself,master - 0 1706384389000 8 connected 0-5460

458cf2edf6cc3005cee4fc3aa93278efa86637ec 192.168.1.13:6386@16386 slave 3075d51754554aa421ee23ccbd8e263c0d5c4f0e 0 1706384388057 3 connected

cc9a9f6bad23314263e2b89e4beede4fc59c3ef3 192.168.1.11:6382@16382 slave 95d8800931e38739a85d6face5296d719a8d5bfa 0 1706384389775 5 connected

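4.4 Scaling test: scaling out

1. Copy the configuration file twice, changing the port to 6387 and 6388 respectively.

2. Start the two new instances. At this point each of them is a standalone master.

[root@192 cluster]# redis-server redis-6387.conf # start the two instances

[root@192 cluster]# redis-server redis-6388.conf

[root@192 cluster]# redis-cli -a 111111 -p 6387 # log in to one of them for the demo

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

127.0.0.1:6387> info replication # show the replication relationship

# Replication

role:master # it is a master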

connected_slaves:0

master_failover_state:no-failover

master_replid:ccf0e3ef0a7ea4c965d4f1298518686b5e748a7c

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:0

second_repl_offset:-1

repl_backlog_active:0

repl_backlog_size:1048576

repl_backlog_first_byte_offset:0

repl_backlog_histlen:0

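3. Add the new 6387 node (which owns no slots yet) to the existing cluster as a master. Command: redis-cli -a <password> --cluster add-node <ip:port of the node to add> <ip:port of a node already in the cluster> (the existing node acts as the introducer)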

[root@192 cluster]# redis-cli -a 111111 --cluster add-node 192.168.1.13:6387 192.168.1.11:6381

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Adding node 192.168.1.13:6387 to cluster 192.168.1.11:6381

>>> Performing Cluster Check (using node 192.168.1.11:6381)

M: 491bb2df7fa39bafd917f7aaa0a409e19e41b07f 192.168.1.11:6381

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 3075d51754554aa421ee23ccbd8e263c0d5c4f0e 192.168.1.12:6383

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 4a60743d7b067dfd03c6a0d4403c88ce173abb1c 192.168.1.12:6384

slots: (0 slots) slave

replicates 491bb2df7fa39bafd917f7aaa0a409e19e41b07f

M: 95d8800931e38739a85d6face5296d719a8d5bfa 192.168.1.13:6385

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 458cf2edf6cc3005cee4fc3aa93278efa86637ec 192.168.1.13:6386

slots: (0 slots) slave

replicates 3075d51754554aa421ee23ccbd8e263c0d5c4f0e

S: cc9a9f6bad23314263e2b89e4beede4fc59c3ef3 192.168.1.11:6382

slots: (0 slots) slave

replicates 95d8800931e38739a85d6face5296d719a8d5bfa

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Getting functions from cluster

>>> Send FUNCTION LIST to 192.168.1.13:6387 to verify there is no functions in it

>>> Send FUNCTION RESTORE to 192.168.1.13:6387

>>> Send CLUSTER MEET to node 192.168.1.13:6387 to make it join the cluster.

[OK] New node added correctly.

[root@192 cluster]#

4. Check the cluster state

5. Assign some slots to the new node. Command: redis-cli -a <password> --cluster reshard <ip:port>

[root@192 cluster]# redis-cli -a 111111 --cluster reshard 192.168.1.11:6381 # the ip:port can be any instance in the cluster

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Performing Cluster Check (using node 192.168.1.11:6381)

M: 491bb2df7fa39bafd917f7aaa0a409e19e41b07f 192.168.1.11:6381

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 3075d51754554aa421ee23ccbd8e263c0d5c4f0e 192.168.1.12:6383

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

M: 2f1ed0f391fa1e0a5b3fac8e5d3c669c811ac283 192.168.1.13:6387

slots: (0 slots) master

S: 4a60743d7b067dfd03c6a0d4403c88ce173abb1c 192.168.1.12:6384

slots: (0 slots) slave

replicates 491bb2df7fa39bafd917f7aaa0a409e19e41b07f

M: 95d8800931e38739a85d6face5296d719a8d5bfa 192.168.1.13:6385

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 458cf2edf6cc3005cee4fc3aa93278efa86637ec 192.168.1.13:6386

slots: (0 slots) slave

replicates 3075d51754554aa421ee23ccbd8e263c0d5c4f0e

S: cc9a9f6bad23314263e2b89e4beede4fc59c3ef3 192.168.1.11:6382

slots: (0 slots) slave

replicates 95d8800931e38739a85d6face5296d719a8d5bfa

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 4096 # number of slots to move; 4096 in this example

What is the receiving node ID? 2f1ed0f391fa1e0a5b3fac8e5d3c669c811ac283 # the receiving node: enter the ID of 6387

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: all # enter all here

Ready to move 4096 slots.

Source nodes:

M: 491bb2df7fa39bafd917f7aaa0a409e19e41b07f 192.168.1.11:6381

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 3075d51754554aa421ee23ccbd8e263c0d5c4f0e 192.168.1.12:6383

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

M: 95d8800931e38739a85d6face5296d719a8d5bfa 192.168.1.13:6385

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

Destination node:

M: 2f1ed0f391fa1e0a5b3fac8e5d3c669c811ac283 192.168.1.13:6387

slots: (0 slots) master

Resharding plan:

Moving slot 5461 from 3075d51754554aa421ee23ccbd8e263c0d5c4f0e

Moving slot 5462 from 3075d51754554aa421ee23ccbd8e263c0d5c4f0e

Moving slot 5463 from 3075d51754554aa421ee23ccbd8e263c0d5c4f0e

Moving slot 5464 from 3075d51754554aa421ee23ccbd8e263c0d5c4f0e

... (and so on)

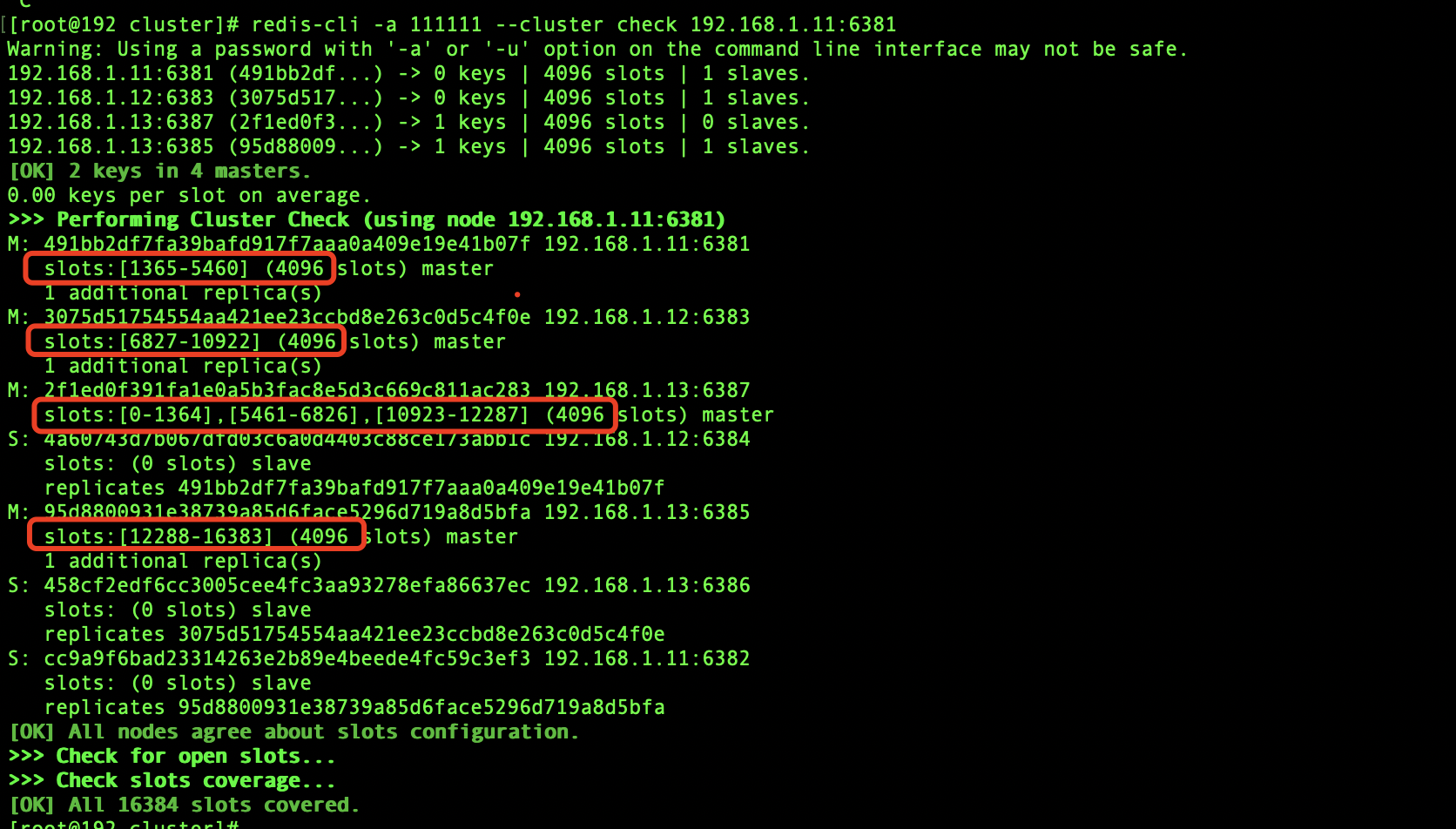

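6. Check the cluster state again

Question: why does 6387 hold three separate ranges while the old nodes still hold contiguous ones?

Reassigning every slot from scratch would be too expensive, so each of the three existing masters (6381, 6383, 6385) gives up the leading part of its own range to the new node 6387: 1365, 1366, and 1365 slots respectively, 4096 in total.

7. Add 6388 as a slave of 6387. Command: redis-cli -a <password> --cluster add-node <ip:new slave port> <ip:new master port> --cluster-slave --cluster-master-id <node ID of the new master>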

[root@192 cluster]# redis-cli -a 111111 --cluster add-node 192.168.1.13:6388 192.168.1.13:6387 --cluster-slave --cluster-master-id 2f1ed0f391fa1e0a5b3fac8e5d3c669c811ac283

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Adding node 192.168.1.13:6388 to cluster 192.168.1.13:6387

>>> Performing Cluster Check (using node 192.168.1.13:6387)

M: 2f1ed0f391fa1e0a5b3fac8e5d3c669c811ac283 192.168.1.13:6387

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

M: 491bb2df7fa39bafd917f7aaa0a409e19e41b07f 192.168.1.11:6381

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

M: 95d8800931e38739a85d6face5296d719a8d5bfa 192.168.1.13:6385

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

S: cc9a9f6bad23314263e2b89e4beede4fc59c3ef3 192.168.1.11:6382

slots: (0 slots) slave

replicates 95d8800931e38739a85d6face5296d719a8d5bfa

M: 3075d51754554aa421ee23ccbd8e263c0d5c4f0e 192.168.1.12:6383

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

S: 4a60743d7b067dfd03c6a0d4403c88ce173abb1c 192.168.1.12:6384

slots: (0 slots) slave

replicates 491bb2df7fa39bafd917f7aaa0a409e19e41b07f

S: 458cf2edf6cc3005cee4fc3aa93278efa86637ec 192.168.1.13:6386

slots: (0 slots) slave

replicates 3075d51754554aa421ee23ccbd8e263c0d5c4f0e

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 192.168.1.13:6388 to make it join the cluster.

Waiting for the cluster to join

>>> Configure node as replica of 192.168.1.13:6387.

[OK] New node added correctly.

[root@192 cluster]#

8. Check the cluster state a third time
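
When checking the state, it helps to confirm that the slot ranges reported for a node add up as expected. The sketch below counts the slots in a slots: string as printed by the check output in this section; count_slots is a name made up for this example.

```python
import re


def count_slots(ranges: str) -> int:
    """Count slots in a string such as '[0-1364],[5461-6826],[10923-12287]'."""
    total = 0
    # Each bracket holds either a range 'start-end' or a single slot number
    for start, end in re.findall(r"\[(\d+)(?:-(\d+))?\]", ranges):
        last = int(end) if end else int(start)
        total += last - int(start) + 1
    return total


print(count_slots("[0-1364],[5461-6826],[10923-12287]"))  # 4096 (1365 + 1366 + 1365)
print(count_slots("[1365-5460]"))                          # 4096
```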

4.5 Scaling test: scaling in

1. Check the cluster state first to get the ID of 6388

[root@192 cluster]# redis-cli -a 111111 --cluster check 192.168.1.13:6387

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

192.168.1.13:6387 (2f1ed0f3...) -> 1 keys | 4096 slots | 1 slaves.

192.168.1.11:6381 (491bb2df...) -> 0 keys | 4096 slots | 1 slaves.

192.168.1.13:6385 (95d88009...) -> 1 keys | 4096 slots | 1 slaves.

192.168.1.12:6383 (3075d517...) -> 0 keys | 4096 slots | 1 slaves.

[OK] 2 keys in 4 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 192.168.1.13:6387)

M: 2f1ed0f391fa1e0a5b3fac8e5d3c669c811ac283 192.168.1.13:6387

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

1 additional replica(s)

S: cc9a9f6bad23314263e2b89e4beede4fc59c3ef3 192.168.1.11:6382

slots: (0 slots) slave

replicates 95d8800931e38739a85d6face5296d719a8d5bfa

M: 491bb2df7fa39bafd917f7aaa0a409e19e41b07f 192.168.1.11:6381

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

M: 95d8800931e38739a85d6face5296d719a8d5bfa 192.168.1.13:6385

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

S: 458cf2edf6cc3005cee4fc3aa93278efa86637ec 192.168.1.13:6386

slots: (0 slots) slave

replicates 3075d51754554aa421ee23ccbd8e263c0d5c4f0e

S: b01ff575c1e793617332d8786c61bb2a3d1bd290 192.168.1.13:6388

slots: (0 slots) slave

replicates 2f1ed0f391fa1e0a5b3fac8e5d3c669c811ac283

S: 4a60743d7b067dfd03c6a0d4403c88ce173abb1c 192.168.1.12:6384

slots: (0 slots) slave

replicates 491bb2df7fa39bafd917f7aaa0a409e19e41b07f

M: 3075d51754554aa421ee23ccbd8e263c0d5c4f0e 192.168.1.12:6383

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

[root@192 cluster]#

2. Remove node 6388. Command: redis-cli -a <password> --cluster del-node <ip:port of the node to remove> <ID of the node to remove>

[root@192 cluster]# redis-cli -a 111111 --cluster del-node 192.168.1.13:6388 b01ff575c1e793617332d8786c61bb2a3d1bd290

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Removing node b01ff575c1e793617332d8786c61bb2a3d1bd290 from cluster 192.168.1.13:6388

>>> Sending CLUSTER FORGET messages to the cluster...

>>> Sending CLUSTER RESET SOFT to the deleted node.

[root@192 cluster]#

3. Empty 6387's slots and reassign them. In this example all of them go to 6381.

[root@192 cluster]# redis-cli -a 111111 --cluster reshard 192.168.1.11:6381

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Performing Cluster Check (using node 192.168.1.11:6381)

M: 491bb2df7fa39bafd917f7aaa0a409e19e41b07f 192.168.1.11:6381

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

M: 3075d51754554aa421ee23ccbd8e263c0d5c4f0e 192.168.1.12:6383

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

M: 2f1ed0f391fa1e0a5b3fac8e5d3c669c811ac283 192.168.1.13:6387

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

S: 4a60743d7b067dfd03c6a0d4403c88ce173abb1c 192.168.1.12:6384

slots: (0 slots) slave

replicates 491bb2df7fa39bafd917f7aaa0a409e19e41b07f

M: 95d8800931e38739a85d6face5296d719a8d5bfa 192.168.1.13:6385

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

S: 458cf2edf6cc3005cee4fc3aa93278efa86637ec 192.168.1.13:6386

slots: (0 slots) slave

replicates 3075d51754554aa421ee23ccbd8e263c0d5c4f0e

S: cc9a9f6bad23314263e2b89e4beede4fc59c3ef3 192.168.1.11:6382

slots: (0 slots) slave

replicates 95d8800931e38739a85d6face5296d719a8d5bfa

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 4096

What is the receiving node ID? 491bb2df7fa39bafd917f7aaa0a409e19e41b07f # the ID of 6381

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: 2f1ed0f391fa1e0a5b3fac8e5d3c669c811ac283 # the ID of 6387

Source node #2: done # enter done here, not all

Ready to move 4096 slots.

Source nodes:

M: 3075d51754554aa421ee23ccbd8e263c0d5c4f0e 192.168.1.12:6383

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

M: 2f1ed0f391fa1e0a5b3fac8e5d3c669c811ac283 192.168.1.13:6387

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

M: 95d8800931e38739a85d6face5296d719a8d5bfa 192.168.1.13:6385

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

Destination node:

M: 491bb2df7fa39bafd917f7aaa0a409e19e41b07f 192.168.1.11:6381

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

Resharding plan:

Moving slot 6827 from 3075d51754554aa421ee23ccbd8e263c0d5c4f0e

Moving slot 6828 from 3075d51754554aa421ee23ccbd8e263c0d5c4f0e

........

4. Remove the 6387 master. Command: redis-cli -a <password> --cluster del-node <ip:port> <node ID of 6387>

[root@192 cluster]# redis-cli -a 111111 --cluster del-node 192.168.1.13:6387 2f1ed0f391fa1e0a5b3fac8e5d3c669c811ac283

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Removing node 2f1ed0f391fa1e0a5b3fac8e5d3c669c811ac283 from cluster 192.168.1.13:6387

>>> Sending CLUSTER FORGET messages to the cluster...

>>> Sending CLUSTER RESET SOFT to the deleted node.

[root@192 cluster]#

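Note

Redis Cluster does not guarantee strong consistency. Under certain conditions, such as a failover before a write has been replicated, writes that were already acknowledged to the client can be lost.

5. Related commands

info replication: returns detailed information about master-slave replication

cluster info: returns the state and statistics of the cluster

cluster nodes: returns detailed information about every node in the cluster

cluster countkeysinslot <slot number>: returns the number of keys stored in that slot on the node being queried; 0 means the slot holds no keys there

cluster keyslot <keyname>: returns the slot the key maps to

cluster failover: run on a slave, it promotes that slave to master, and the previous master becomes its slave

Multi-key operations on keys in different slots are poorly supported; this is where the {} placeholder (hash tag) comes in:

Keys that are not in the same slot cannot be used together in multi-key commands such as mset and mget.

Use {} to define a group: when a key contains {...}, only the content inside the braces is hashed, so keys with the same content in the braces land in the same slot. For example, if k1, k2, and k3 each carry the tag {x}, all three are hashed as x and therefore share one slot.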
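
The rule for which part of a key is hashed can be sketched as follows: take the text between the first { and the first } after it, and fall back to the whole key when there are no braces or nothing between them. The function name hashed_part and the sample keys are made up for this illustration.

```python
def hashed_part(key: str) -> str:
    """Return the portion of the key that is used to compute the slot."""
    start = key.find("{")
    if start == -1:
        return key  # no opening brace: hash the whole key
    end = key.find("}", start + 1)
    if end == -1 or end == start + 1:
        return key  # no closing brace, or empty braces: hash the whole key
    return key[start + 1:end]


for key in ("k1{x}", "k2{x}", "k3{x}", "k1"):
    print(key, "->", hashed_part(key))
```

Because k1{x}, k2{x}, and k3{x} all reduce to x, they map to the same slot and can be used together in mset and mget.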

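Whether the cluster must be complete to serve requests:

cluster-require-full-coverage defaults to yes, meaning the cluster must be complete before it serves requests. With the current 3-master, 3-slave architecture, the three masters split the 16384 slots, so each small group (one master plus one slave) holds about a third of the slots and the corresponding data. If any one of these groups goes down entirely, only two thirds of the data can still be served and the cluster is incomplete. By default Redis stops serving requests in that situation.

If you need the cluster to keep serving even when it is incomplete, set this parameter to no. The failed group stays unavailable, but the other groups keep serving the slots they own.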
